Understanding triplet loss, with example code in both TensorFlow and NumPy.

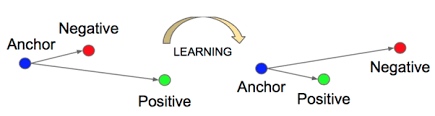

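Triplet loss is a widely used loss function. In face recognition and clustering it offers a natural way to map inputs into a space and compute a loss there. FaceNet, for example, learns an embedding that maps face images directly into Euclidean space. Optimizing this embedding can be summarized as follows: build many triplets (Anchor, Positive, Negative), where the Anchor and the Positive share a label and the Anchor and the Negative have different labels (in face recognition, the Anchor and the Positive are the same person, while the Negative is a different person). The embedding is then learned so that, in Euclidean space, the Anchor is closer to the Positive than to the Negative.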
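To illustrate the triplet construction described above, here is a minimal Python sketch that samples triplets at random from a list of labels. The function name `sample_triplets` and the toy labels are hypothetical, and uniform random sampling is an assumption made for simplicity. FaceNet itself selects triplets more carefully (it mines hard examples online within mini-batches), so treat this only as a picture of the Anchor/Positive/Negative label constraint.

```python
import random
from collections import defaultdict


def sample_triplets(labels, num_triplets, seed=0):
    """Randomly sample (anchor, positive, negative) index triplets.

    Anchor and positive share a label; anchor and negative have different labels.
    Uniform random sampling is an assumption for illustration, not FaceNet's
    triplet selection strategy.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)  # label -> indices of samples with that label
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    # Only labels with at least two samples can supply an anchor/positive pair.
    anchor_labels = [lab for lab, idxs in by_label.items() if len(idxs) >= 2]
    if not anchor_labels or len(by_label) < 2:
        raise ValueError("need a label with >= 2 samples and >= 2 distinct labels")

    triplets = []
    for _ in range(num_triplets):
        lab = rng.choice(anchor_labels)
        a, p = rng.sample(by_label[lab], 2)  # two distinct samples, same label
        neg_label = rng.choice([other for other in by_label if other != lab])
        n = rng.choice(by_label[neg_label])  # any sample with a different label
        triplets.append((a, p, n))
    return triplets


labels = ["alice", "alice", "bob", "bob", "carol"]
for a, p, n in sample_triplets(labels, num_triplets=3):
    print(labels[a], labels[p], labels[n])
```

Requiring at least one label with two or more samples, plus at least two distinct labels, is what makes a valid triplet possible at all: with only one image of a person (like "carol" here), that person can serve as a Negative but never as an Anchor.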

Formulation

Formally, we want:

$$
\left\lVert f(x^a_i) - f(x^p_i) \right\rVert^2_2 + \alpha \lt \left\lVert f(x^a_i) - f(x^n_i) \right\rVert^2_2, \quad \forall \left(f(x^a_i), f(x^p_i), f(x^n_i)\right) \in \mathscr T
$$

Here $\alpha$ is a margin enforced between positive and negative pairs, and $\mathscr T$ is the set of triplets drawn from the training set, with cardinality $N$.

The loss function can then naturally be written as:

$$
\sum_{i=1}^{N} \Bigl[ \left\lVert f(x^a_i) - f(x^p_i) \right\rVert^2_2 - \left\lVert f(x^a_i) - f(x^n_i) \right\rVert^2_2 + \alpha \Bigr]_+
$$

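The subscript $+$ denotes the hinge $[z]_+ = \max(z, 0)$: if the bracketed term is less than 0 (the Anchor-to-Positive distance plus the margin is already smaller than the Anchor-to-Negative distance), the triplet contributes no loss; otherwise the bracketed value itself is taken as the loss.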
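To make the hinge concrete, here is a hand-computed example with made-up 2-D embeddings and an assumed margin $\alpha = 0.2$ (all values chosen purely for illustration). In both rows $\lVert f(x^a) - f(x^p) \rVert_2^2 = 1$; only the Negative changes:

$$
\begin{aligned}
&f(x^a)=(0,0),\; f(x^p)=(1,0),\; f(x^n)=(2,0): &&\bigl[\,1 - 4 + 0.2\,\bigr]_+ = [-2.8]_+ = 0 \\
&f(x^a)=(0,0),\; f(x^p)=(1,0),\; f(x^n)=(0.5,0.5): &&\bigl[\,1 - 0.5 + 0.2\,\bigr]_+ = [0.7]_+ = 0.7
\end{aligned}
$$

In the first row the Negative is already more than $\alpha$ farther from the Anchor than the Positive is, so the triplet contributes nothing; in the second row the Negative is closer than the Positive, so the triplet is penalized.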

Code

NumPy implementation

```python
import numpy as np
```
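A minimal NumPy sketch of the summed loss above, assuming the embeddings of $N$ triplets are stacked row-wise into three `(N, D)` arrays. The name `triplet_loss_np` and the default margin `alpha=0.2` are assumptions for illustration, not recovered from the original listing.

```python
import numpy as np


def triplet_loss_np(anchor, positive, negative, alpha=0.2):
    """Summed triplet loss over a batch of embeddings.

    anchor, positive, negative: arrays of shape (N, D); row i forms triplet i.
    alpha: margin (0.2 is an assumed default, not taken from the original code).
    """
    # Squared Euclidean distances, one per triplet: shape (N,)
    pos_dist = np.sum(np.square(anchor - positive), axis=1)
    neg_dist = np.sum(np.square(anchor - negative), axis=1)
    # Hinge [z]_+ = max(z, 0): triplets that already satisfy the margin give 0
    losses = np.maximum(pos_dist - neg_dist + alpha, 0.0)
    return np.sum(losses)


anchor = np.array([[0.0, 0.0], [0.0, 0.0]])
positive = np.array([[1.0, 0.0], [1.0, 0.0]])
negative = np.array([[2.0, 0.0], [0.5, 0.5]])
print(triplet_loss_np(anchor, positive, negative))  # approximately 0.7 (0 + 0.7)
```

Because every operation is row-wise, the whole batch is handled without a Python loop; replacing the final `np.sum` with `np.mean` gives a batch-averaged loss whose scale does not depend on the batch size.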

TensorFlow implementation

```python
import tensorflow as tf
```
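The same computation written with TensorFlow ops, as a sketch that assumes TensorFlow 2.x with eager execution (the TensorFlow version used in the original is not known). The name `triplet_loss_tf` and the margin `alpha=0.2` are again hypothetical choices.

```python
import tensorflow as tf


def triplet_loss_tf(anchor, positive, negative, alpha=0.2):
    """Summed triplet loss; inputs are float tensors of shape (N, D).

    Assumes TensorFlow 2.x eager execution; alpha=0.2 is an assumed margin.
    """
    # Squared Euclidean distances, one per triplet: shape (N,)
    pos_dist = tf.reduce_sum(tf.square(anchor - positive), axis=1)
    neg_dist = tf.reduce_sum(tf.square(anchor - negative), axis=1)
    # Hinge: clip negative values to zero, then sum over the batch
    losses = tf.maximum(pos_dist - neg_dist + alpha, 0.0)
    return tf.reduce_sum(losses)


anchor = tf.constant([[0.0, 0.0], [0.0, 0.0]])
positive = tf.constant([[1.0, 0.0], [1.0, 0.0]])
negative = tf.constant([[2.0, 0.0], [0.5, 0.5]])
loss = triplet_loss_tf(anchor, positive, negative)
print(float(loss))  # approximately 0.7
```

Because the loss is built only from TensorFlow ops, its gradients with respect to the embeddings can be obtained with `tf.GradientTape` when training the embedding network.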

The complete code follows; it tests the two implementations against each other:

```python
import tensorflow as tf
```
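A self-contained sketch of such a comparison, assuming TensorFlow 2.x. It restates a NumPy and a TensorFlow version of the loss compactly so that it runs on its own, feeds both the same random float32 embeddings (hypothetical stand-in data: 8 triplets of dimension 16, margin 0.2), and checks that the results agree within floating-point tolerance.

```python
import numpy as np
import tensorflow as tf


def triplet_loss_np(anchor, positive, negative, alpha=0.2):
    pos = np.sum(np.square(anchor - positive), axis=1)
    neg = np.sum(np.square(anchor - negative), axis=1)
    return np.sum(np.maximum(pos - neg + alpha, 0.0))


def triplet_loss_tf(anchor, positive, negative, alpha=0.2):
    pos = tf.reduce_sum(tf.square(anchor - positive), axis=1)
    neg = tf.reduce_sum(tf.square(anchor - negative), axis=1)
    return tf.reduce_sum(tf.maximum(pos - neg + alpha, 0.0))


# Random stand-in embeddings (hypothetical data): 8 triplets of 16-dim vectors
rng = np.random.default_rng(0)
a, p, n = (rng.standard_normal((8, 16)).astype(np.float32) for _ in range(3))

loss_np = triplet_loss_np(a, p, n)
loss_tf = triplet_loss_tf(tf.constant(a), tf.constant(p), tf.constant(n)).numpy()
print(loss_np, loss_tf)

# Both should agree up to float32 rounding
np.testing.assert_allclose(loss_np, loss_tf, rtol=1e-5, atol=1e-5)
```

A tolerance is used instead of exact equality because NumPy and TensorFlow may accumulate the float32 sums in a different order.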